最先在李沐大神在B站的分享中了解到autogluon,它是一个自动机器学习工具,可用于文本图片识别、表格任务等。据说效果非常不错–号称3行代码打败99%的机器学习模型,甚至说标志着手动调参的时代已经结束。

关于它的原理暂且不深入了解了,简单记录下在传统表格任务上的用法,可以作为对数据的初步探索。

- 安装方式:https://auto.gluon.ai/stable/index.html

- 官方教程:https://auto.gluon.ai/stable/tutorials/tabular_prediction/tabular-quickstart.html

1

|

from autogluon.tabular import TabularDataset, TabularPredictor

|

1、准备数据#

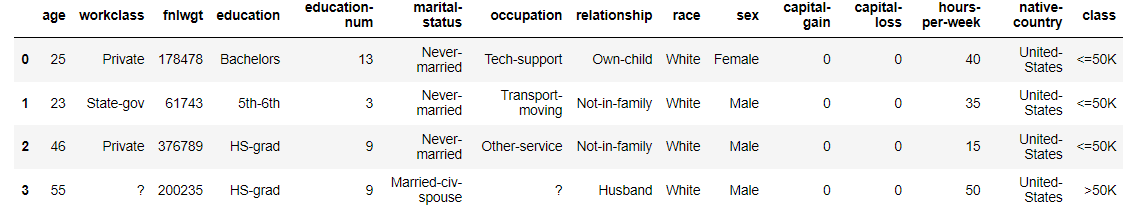

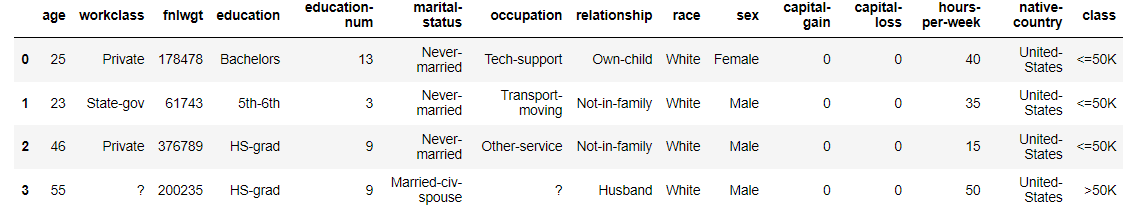

(1)(多列)特征与(1列)target合并在一个表格中,转为autogluon的TabularDataset对象;

(2)每行代表一个样本,须分为训练集与测试集。

1

2

3

4

5

6

7

8

9

10

11

12

13

|

## (1) 方式1: 从csv文件读取

train_data = TabularDataset('https://autogluon.s3.amazonaws.com/datasets/Inc/train.csv')

subsample_size = 500 # 仅为演示、节省时间

train_data = train_data.sample(n=subsample_size, random_state=0)

test_data = TabularDataset('https://autogluon.s3.amazonaws.com/datasets/Inc/test.csv')

type(train_data)

# autogluon.core.dataset.TabularDataset

## (2) 方式2:可由pd.Dataframe对象转换

import pandas as pd

train_df = pd.read_csv("https://autogluon.s3.amazonaws.com/datasets/Inc/train.csv")

train_data = TabularDataset(train_df)

|

2、模型训练#

(1)autogluon可自动根据target列的内容决定是回归还是分类任务;

(2)对于特征列数据,autogluon可自动完成缺失值处理、数据标准化等,无需手动清洗数据。

2.1 基础流程#

1

2

3

4

5

6

7

8

|

#指定target列

label = 'class'

#指定模型保存位置

save_path = 'tmp'

#开始训练

predictor = TabularPredictor(label=label, path=save_path).fit(train_data)

# predictor = TabularPredictor.load(save_path) 加载模型

|

1

2

3

4

5

|

predictor.problem_type #训练任务类型

print(predictor.feature_metadata) #所判断的特征列数据类型

#不同类型模型的训练结果验证集指标排名

predictor.fit_summary()

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

|

# 不同模型在测试集的指标排名

predictor.leaderboard(test_data, silent=True)

# 最优模型在测试集的多种结果指标

predictor.evaluate(test_data)

##等价于如下步骤

y_test = test_data[label] #真实值

test_data_nolab = test_data.drop(columns=[label])

test_data_nolab.head()

y_pred = predictor.predict(test_data_nolab) #预测值

predictor.evaluate_predictions(y_true=y_test, y_pred=y_pred, auxiliary_metrics=True)

predictor.predict_proba(test_data_nolab) #预测概率

# 使用指定模型预测

predictor.predict(test_data, model='LightGBM')

|

2.2 多种指标#

autogluon对于分类任务,默认使用accuracy指标;对于回归任务,默认使用RMSE指标

其它可选指标包括

(1)分类:

1

2

3

|

['accuracy', 'balanced_accuracy', 'f1', 'f1_macro', 'f1_micro', 'f1_weighted',

'roc_auc', 'roc_auc_ovo_macro', 'average_precision', 'precision', 'precision_macro', 'precision_micro',

'precision_weighted', 'recall', 'recall_macro', 'recall_micro', 'recall_weighted', 'log_loss', 'pac_score']

|

(2)回归

1

2

|

['root_mean_squared_error', 'mean_squared_error', 'mean_absolute_error',

'median_absolute_error', 'mean_absolute_percentage_error', 'r2']

|

如需更多评价指标可参考https://auto.gluon.ai/stable/tutorials/tabular_prediction/tabular-custom-metric.html#sec-tabularcustommetric

- 用于训练时的模型优化:选择一个指标,用于模型优化以及比较

1

2

3

|

metric = 'roc_auc'

predictor = TabularPredictor(label=label, path=save_path, eval_metric=metric).fit(train_data)

predictor.fit_summary()

|

- 用于训练后的模型不同角度的性能比较:可选择多个指标

1

2

|

metrics = ['roc_auc', "f1", "recall", "recall"]

predictor.leaderboard(test_data, extra_metrics = metrics, silent=True)

|

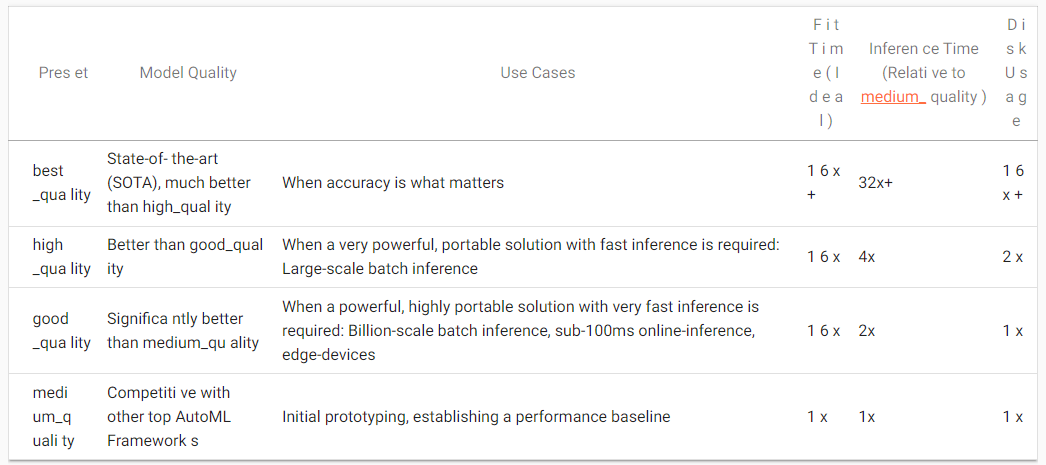

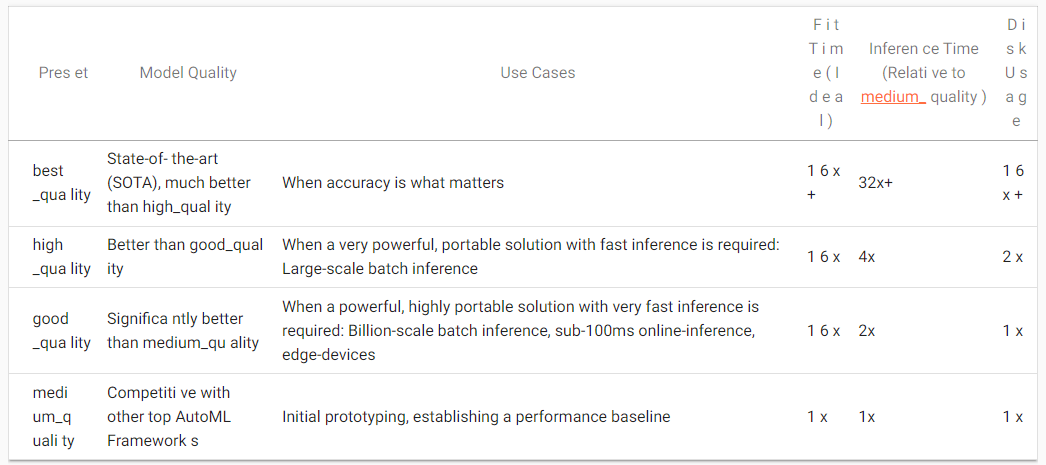

2.3 不同模式#

主要针对 TabularPredictor().fit(train_data, presets=)的presets参数

默认为"medium_quality",其余依次为"good_quality", “high_quality”, “best_quality”

从左到右,代表了训练时间/成本与训练结果性能之间的取舍。

官方教程建议先按默认的medium_quality训练,对模型有初步的了解后,在尝试best_quality

此外fit()函数还包括一个time_limit=参数,单位为秒,直接指定训练的时长。如不指定默认训练完全部可能的模型。

报错记录#

- 1、在best_quality/high_quality训练模式下会可能会出现如下报错:

1

2

3

4

5

6

7

8

9

10

11

12

13

|

OpenBLAS : Program is Terminated. Because you tried to allocate too many memory regions.

This library was built to support a maximum of 128 threads - either rebuild OpenBLAS

with a larger NUM_THREADS value or set the environment variable OPENBLAS_NUM_THREADS to

a sufficiently small number. This error typically occurs when the software that relies on

OpenBLAS calls BLAS functions from many threads in parallel, or when your computer has more

cpu cores than what OpenBLAS was configured to handle.

OpenBLAS : Program is Terminated. Because you tried to allocate too many memory regions.

This library was built to support a maximum of 128 threadPC: @ 0x7ff3b109952c (unknown) dgemm_incopy_SKYLAKEX

@ 0x7ff3b95f0630 (unknown) (unknown)

[2023-05-27 15:40:36,365 E 90917 106900] logging.cc:361: *** SIGSEGV received at time=1685173236 on cpu 136 ***

[2023-05-27 15:40:36,366 E 90917 106900] logging.cc:361: PC: @ 0x7ff3b109952c (unknown) dgemm_incopy_SKYLAKEX

[2023-05-27 15:40:36,366 E 90917 106900] logging.cc:361: @ 0x7ff3b95f0630 (unknown) (unknown)

Fatal Python error: Segmentation fault

|

解决方案参考:https://github.com/autogluon/autogluon/issues/1020 主要原因是KNN模型训练时调用了过多线程,导致超过OpenBLAS所支持的上限。

因此可限制KNN使用的线程数(默认调用机器的全部线程),或者干脆使用KNN模型

1

|

predictor = TabularPredictor(label="label").fit(zuhe_train_Tab,presets="high_quality",excluded_model_types = ['KNN'])

|