1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

|

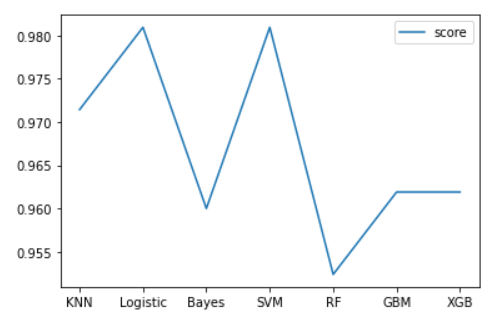

from sklearn.linear_model import LogisticRegression

model_logistic = LogisticRegression()

param_grid = [

{'penalty' : ['l1', 'l2', 'elasticnet', 'none'],

'C' : [0.01, 0.1, 1, 10, 100],

'solver' : ['lbfgs','newton-cg','liblinear','sag','saga'],

'max_iter' : [1000]

}

]

grid_search = GridSearchCV(model_logistic, param_grid, cv=5,

scoring="accuracy", n_jobs=1)

grid_search.fit(train_X, train_y)

print(grid_search.best_params_)

print(grid_search.best_score_)

logistic_grid_search = grid_search

# {'C': 10, 'max_iter': 1000, 'penalty': 'l1', 'solver': 'saga'}

# 0.980952380952381

|